Last year I wrote about a number of interesting new applications of facial recognition in healthcare. They use smart technology to detect things like your heart rate, stress levels and various other potential health issues from a live video feed.

An interesting offshoot of such projects was published recently by researchers at the University of Cambridge. They’ve developed an AI system that monitors the facial expressions of sheep to test for signs of pain and distress. They believe their system could be used to improve sheep welfare, and could also be applied to other animals.

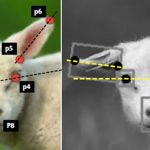

The system detects distinct parts of the sheep’s face before comparing what it sees with standardized measurement tools used by vets to diagnose pain. As with so many AI-based tools, the key is to provide early diagnoses to help with effective treatment, whether it’s of foot rot, mastitis or one of the many other conditions sheep often suffer from.

Facial expressions

At the heart of the system is the Sheep Pain Facial Expression Scale (SPFES), which was developed by the team to detect pain in sheep with high levels of accuracy. To make effective use of the tool, they bypassed teaching humans to use it and went straight to machines.

SPFES suggests that there are five key signs that a sheep is in pain: their eyes narrow, their cheeks tighten, their ears fold forwards, their lips pull down and back, and their nostrils change from a U shape to a V shape. The SPFES then ranks these characteristics on a scale of 1 to 10 to measure the severity of the pain.
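To make the idea of scoring five facial signs a little more concrete, here is a minimal Python sketch. It is not the researchers' method: the `FacialSigns` class, the 0.0 to 1.0 rating per sign, and the averaging rule in `severity` are all assumptions made purely for illustration, since the article does not say how the individual signs are combined into the 1 to 10 score.

```python
from dataclasses import dataclass, fields


@dataclass
class FacialSigns:
    """Hypothetical ratings for the five SPFES signs.

    Each value runs from 0.0 (sign absent) to 1.0 (sign pronounced).
    This rating scheme is an assumption, not part of the published scale.
    """
    eyes_narrowed: float
    cheeks_tightened: float
    ears_folded_forward: float
    lips_pulled_down_and_back: float
    nostrils_v_shaped: float


def severity(signs: FacialSigns) -> int:
    """Map the mean of the five ratings onto a 1-10 severity score.

    The simple average is an assumed aggregation rule for illustration only.
    """
    values = [getattr(signs, f.name) for f in fields(signs)]
    for value in values:
        if not 0.0 <= value <= 1.0:
            raise ValueError("each rating must lie between 0.0 and 1.0")
    mean = sum(values) / len(values)
    # A mean of 0.0 gives 1 (lowest severity); a mean of 1.0 gives 10 (highest).
    return 1 + round(mean * 9)


if __name__ == "__main__":
    relaxed = FacialSigns(0.0, 0.0, 0.0, 0.0, 0.0)
    distressed = FacialSigns(1.0, 1.0, 1.0, 1.0, 1.0)
    print(severity(relaxed), severity(distressed))
```

In a real system the five ratings would come from the image model rather than being typed in by hand, and the weighting of each sign would presumably follow the veterinary scale itself.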

“The interesting part is that you can see a clear analogy between these actions in the sheep’s faces and similar facial actions in humans when they are in pain – there is a similarity in terms of the muscles in their faces and in our faces,” the authors say. “However, it is difficult to ‘normalise’ a sheep’s face in a machine learning model. A sheep’s face is totally different in profile than looking straight on, and you can’t really tell a sheep how to pose.”

The machine learning model was trained using several hundred photographs of sheep, provided by vets during the course of treatment. Each photo was tagged according to the level of pain the sheep was experiencing.

In early tests, the model was shown to be around 80% accurate, so there is clearly still room for improvement, and the team hope to use larger datasets to train the algorithm further. They also hope to advance the system so that it can work in real-time on moving images, whilst also being effective when the sheep isn’t looking directly at the camera. If this kind of capability is added, it should be possible to position cameras in various places and monitor the sheep in real-time.
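For readers wondering what a figure like "around 80% accurate" means in practice, it is typically the share of test photos where the model's predicted label matches the label the vets assigned. The sketch below shows that calculation in Python; the `accuracy` function and the example labels are hypothetical and are not taken from the study.

```python
def accuracy(predicted, actual):
    """Return the fraction of predictions that match the vet-assigned labels."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    if not actual:
        raise ValueError("at least one labelled example is required")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


if __name__ == "__main__":
    # Made-up labels for five photos: the model gets four of the five right.
    vet_labels = ["pain", "no pain", "pain", "pain", "no pain"]
    model_labels = ["pain", "no pain", "pain", "no pain", "no pain"]
    print(f"{accuracy(model_labels, vet_labels):.0%}")
```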