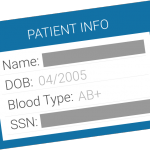

I’ve written numerous times on the tremendous potential of health data to transform both medical research and practice, but privacy concerns remain considerable. Much of the discussion around the security of medical data centres on our ability to de-identify data, thus making the ‘good stuff’ available whilst not denying privacy to the patients themselves.

The concept of de-identification is a simple one: it aims to ensure that information and identity are decoupled. A recent study suggests, however, that things might not be so straightforward. The authors found that patients can be re-identified relatively easily, usually without decryption.

The process used by the team involves linking the unencrypted parts of a patient’s record with information that is already known about the individual. The results are similar to those of other studies, all of which show that seemingly mundane facts can be enough to isolate an individual. Decreasing the precision of the data can reduce the risk of re-identification, albeit at the cost of the data’s utility.
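To make the linking idea concrete, here is a minimal sketch in Python. It is not the researchers’ method, just an illustration under stated assumptions: the records, the field names (`birth_year`, `delivery_date`) and the helper functions `find_matches` and `coarsen` are all hypothetical toy constructs of mine. It shows how two known facts can single out one record, and how reducing the date to month precision makes the match ambiguous again.

```python
# Toy, made-up "de-identified" records: no names, but some fields are left in the clear.
records = [
    {"id": "a1", "birth_year": 1980, "delivery_date": "2012-03-14"},
    {"id": "b2", "birth_year": 1980, "delivery_date": "2012-03-20"},
    {"id": "c3", "birth_year": 1975, "delivery_date": "2012-07-02"},
    {"id": "d4", "birth_year": 1982, "delivery_date": "2012-03-14"},
]

def find_matches(records, known):
    """Return the records whose fields equal every known fact."""
    return [r for r in records if all(r.get(k) == v for k, v in known.items())]

def coarsen(record):
    """Reduce precision: keep only the year and month of the delivery date."""
    out = dict(record)
    out["delivery_date"] = record["delivery_date"][:7]
    return out

# Facts an attacker might know from public sources (hypothetical).
known = {"birth_year": 1980, "delivery_date": "2012-03-14"}

exact = find_matches(records, known)
coarse = find_matches([coarsen(r) for r in records], coarsen(known))

print([r["id"] for r in exact])   # one candidate: the record is isolated
print([r["id"] for r in coarse])  # two candidates: the match is ambiguous
```

With the exact date, only record `a1` fits. Once dates are published at month precision, `a1` and `b2` become indistinguishable, which is safer for the patient but less useful to a researcher who needs the exact date.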

The uniqueness of patients

The first step in re-identifying someone is to understand their uniqueness. The researchers chose childbirth as a simple and common medical procedure on which to test their hypothesis. Many people will be easily identifiable from public information, such as their date of birth or the date of delivery. The researchers were able to find unique records for several high-profile Australians using this approach alone.

Suffice it to say, this is a relatively blunt instrument, but the researchers were then able to cross-reference this initial dataset with a second dataset showing population-wide billing frequencies. This can help to show whether a patient who is unique in the published data is also unique in the population as a whole.
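The difference between being unique in a sample and unique in the population can be sketched in a few lines of Python. Again, this is an illustration rather than the study’s actual procedure: the records, the fields `item` and `year_of_birth`, the `population_counts` table and both helper functions are hypothetical, and the numbers are invented purely for the example.

```python
from collections import Counter

FIELDS = ("item", "year_of_birth")

def key(record, fields=FIELDS):
    """Build the combination of quasi-identifiers used for matching."""
    return tuple(record[f] for f in fields)

def sample_unique(records):
    """Records whose combination appears exactly once in the published sample."""
    counts = Counter(key(r) for r in records)
    return [r for r in records if counts[key(r)] == 1]

def population_unique(records, population_counts):
    """Sample-unique records whose combination is also unique population-wide."""
    return [r for r in sample_unique(records)
            if population_counts.get(key(r)) == 1]

# Invented sample of released records.
sample = [
    {"id": "p1", "item": "caesarean", "year_of_birth": 1980},
    {"id": "p2", "item": "caesarean", "year_of_birth": 1975},
    {"id": "p3", "item": "rare_procedure", "year_of_birth": 1962},
]

# Invented population-wide frequencies for the same combinations.
population_counts = {
    ("caesarean", 1980): 412,
    ("caesarean", 1975): 97,
    ("rare_procedure", 1962): 1,
}

in_sample = [r["id"] for r in sample_unique(sample)]
in_population = [r["id"] for r in population_unique(sample, population_counts)]
print(in_sample)      # all three look unique in the small sample
print(in_population)  # only p3 is unique across the whole population
```

All three records look unique in the small sample, but only `p3` remains unique once the population-wide frequencies are taken into account, so it is the one a match would confidently point to.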

This then feeds into the second step, which examines the uniqueness of the individual according to characteristics held in commercial datasets that may or may not be available directly, such as a pharmaceutical company database. This means that a pharma company, your bank or your insurer might be able to easily re-identify you using the data they own.

Whilst there is no guarantee of re-identification using these methods, they certainly provide the opportunity for it. When put into this context, it raises the question of how valuable claims by companies such as DeepMind are when they trumpet the de-identification of the data used to feed their algorithms.

What can we do?

As I’ve said many times, I do think the sharing of medical data can deliver huge advances in both treatment and research, so how can we ensure that data is shared whilst privacy is maintained?

The authors are not optimistic. They suggest that most de-identification methods are bound to fail, due in large part to the inherent inconsistencies of their aims. It’s incredibly difficult to both maintain privacy and publish detailed individual records.

“Some data can be safely published online, such as information about government, aggregations of large collections of material, or data that is differentially private,” they say. “For sensitive, complex data about individuals, a much more controlled release in a secure research environment is a better solution.”

There have been various recommendations, such as the ‘trusted user’ model, whilst dynamic consent aims to give individuals greater control over their data and how it’s used. There has even been a proposal by the Australian government to criminalize the re-identification of data. Of course, that places the emphasis on the re-identification process rather than on the poor de-identification in the first place, and attempts to hide the problem rather than solve it.

“Our hope is that this research contributes to a fair, open, scientific and constructive discussion about open data sharing and patient privacy,” the authors conclude.